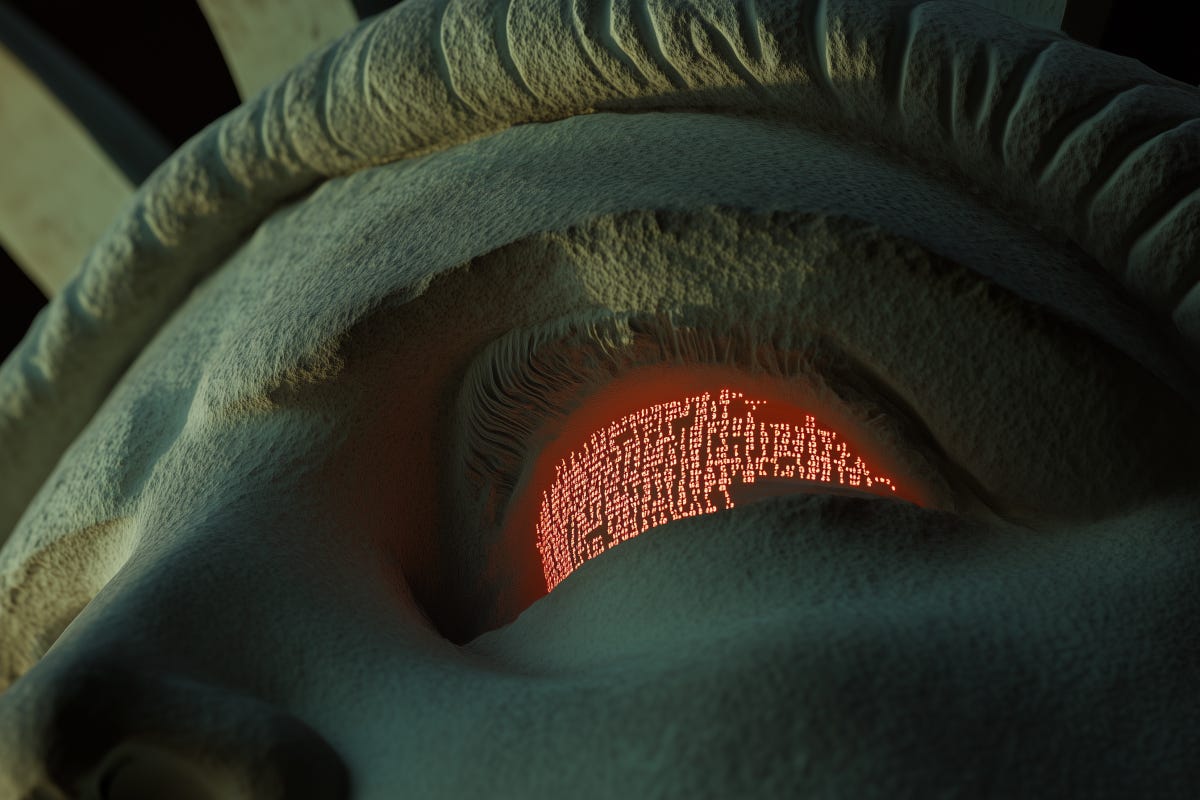

AI in a Time of Democratic Crisis

It can be hard to keep two thoughts simultaneously in one’s head. We are in the midst of a genuine technological revolution, likely to radically outpace any previous comparator. And we are also in the middle of a radical geopolitical realignment that could ultimately match the scale of the Treaty of Westphalia, or the post-World War Two international settlement. Each of these trends matters tremendously. They are both accelerating. And they are interacting in real time: how each goes is likely to define the other. I have sometimes invoked Yeats’ ‘The Second Coming’, a little ironically, to describe a millenarian attitude among AI researchers that has in the past seemed premature. But it feels deeply apposite now. Some rough beasts are about to be born, and the falcon definitely cannot hear the falconer.

For multidisciplinary perspectives on the widening gyre, you’re all invited to a symposium on AI and Democratic Freedoms that I’m organising with the Knight First Amendment Institute in New York (and online) on April 10-11. I thought it might be a bit on the nose when we first planned it; I couldn’t have known how much so, or the degree to which Columbia University itself would be in the middle of a firestorm of assaults on academic freedom and free speech (for the Knight First Amendment Institute’s statements on these issues, see here). The symposium features leaders and rising stars from across AI safety and governance, and epitomises the sociotechnical approach to AI safety that I called for, with Alondra Nelson, back in 2023 (on that, see also an exciting CHI workshop on sociotechnical AI governance below). Papers from the symposium will be released starting in April.

And yep, there’s so much interesting work across philosophy of AI and computing these days that we’re dropping the ‘normative’ qualifier. It’s just the philosophy of computing newsletter now.

As always, we welcome your contributions for future issues – send relevant events, papers, or opportunities to mint@anu.edu.au.

- Seth

March Highlights

• Opportunities: Several summer research positions and fellowships have upcoming deadlines. The Ethics Institute Summer Research Internship (deadline March 31) offers PhD students and recent PhD graduates a chance to work on AI evaluation and governance. The AISI Challenge Fund and the Diverse Intelligences Summer Institute are both accepting rolling applications from March. Heads up: MINT is going to have an undergraduate research intern opportunity coming up soon too.

• New Papers: The philosophy of AI and computing continues to advance: look out in particular for Brett Karlan and Henrik Kugelberg’s forthcoming paper in Philosophy and Phenomenological Research. More on the AI safety side, the MINT team is particularly interested in Séb Krier and co-authors’ approach to sustaining liberal society under AGI (they’ll be discussing this at the Knight Institute symposium mentioned above).

• Events: Besides AI and Democratic Freedoms, upcoming events include the Workshop on Advancing Fairness in Machine Learning (April 9-10) at the University of Kansas and the Workshop on Bidirectional Human-AI Alignment (April 27) at ICLR. The upcoming conference on large-scale AI risks at KU Leuven also looks promising.

Events

Artificial Intelligence and Democratic Freedoms

Date: April 10-11, 2025

Location: Lee C. Bollinger Forum, Columbia University, New York, United States

Link: https://knightcolumbia.org/events/artificial-intelligence-and-democratic-freedoms

Hosted by the Knight First Amendment Institute in collaboration with Columbia Engineering, this symposium examines the risks advanced AI systems pose to democratic freedoms and explores potential mitigating interventions. The event features seven panel discussions with leading scholars and technologists addressing AI regulation, AI agents' societal impacts, technical safety approaches, AI's role in enhancing democracy, AI in the digital public sphere, tensions between AI safety and freedom of expression, and preparation for transformative AI. Keynote speakers include Ben Buchanan (Johns Hopkins) and Erica Chenoweth (Harvard).

Workshop on Advancing Fairness in Machine Learning

Date: April 9-10, 2025

Location: Center for Cyber Social Dynamics, University of Kansas, Lawrence, United States

Link: https://philevents.org/event/show/130478

Hosted by the Center for Cyber Social Dynamics, this multidisciplinary workshop aims to foster dialogue on fairness in machine learning across technical, legal, social, and philosophical domains. Topics include algorithmic bias, fairness metrics, ethical foundations, real-world applications, and legal frameworks.

Workshop on Bidirectional Human-AI Alignment

Date: April 27, 2025

Location: ICLR 2025, Singapore (hybrid: in-person and virtual)

Link: https://bialign-workshop.github.io/#/

This interdisciplinary workshop redefines the challenge of human-AI alignment by emphasizing a bidirectional approach—not only aligning AI with human specifications but also empowering humans to critically engage with AI systems. Featuring research from Machine Learning (ML), Human-Computer Interaction (HCI), Natural Language Processing (NLP), and related fields, the workshop explores dynamic, evolving interactions between humans and AI.

International Conference on Large-Scale AI Risks

Date: May 26-28, 2025

Location: KU Leuven, Belgium

Link: https://www.kuleuven.be/ethics-kuleuven/chair-ai/conference-ai-risks

Hosted by KU Leuven, this conference focuses on exploring and mitigating the risks posed by large-scale AI systems. It brings together experts in AI safety, governance, and ethics to discuss emerging challenges and policy frameworks.

1st Workshop on Sociotechnical AI Governance (STAIG@CHI 2025)

Date: April 27, 2025

Location: Yokohama, Japan

Link: https://chi-staig.github.io/

STAIG@CHI 2025 aims to build a community that tackles AI governance from a sociotechnical perspective, bringing together researchers and practitioners to drive actionable strategies.

ACM Conference on Fairness, Accountability, and Transparency (FAccT 2025)

Date: June 23-26, 2025

Location: Athens, Greece

Link: https://facctconference.org/2025/

FAccT is a premier interdisciplinary conference dedicated to the study of responsible computing. The 2025 edition in Athens will bring together researchers across fields—philosophy, law, technical AI, social sciences—to advance the goals of fairness, accountability, and transparency in computing systems.

Opportunities

CFP: European Workshop on Algorithmic Fairness (EWAF'25)

Location: Europe (Hybrid options may be available)

Link: https://2025.ewaf.org/submitting/call-for-interactive-sessions

Deadline: March 27, 2025 (23:59, AoE)

The fourth European Workshop on Algorithmic Fairness is accepting proposals for interactive sessions that address fairness in AI within the European context. EWAF seeks contributions that bridge gaps between theory and practice, examine European institutions' impact on ethical AI, and foster interdisciplinary conversations between diverse stakeholders. Formats include workshops, panels, unconferences, artistic interventions, and other interactive formats. Themes include AI regulation in Europe, discrimination in European contexts, data privacy, AI's impact on public services, and community-led approaches to AI design and deployment. The workshop particularly values contributions demonstrating practical applications and real-world case studies.

CFP: Artificial Intelligence and Collective Agency

Dates: July 3–4, 2025

Location: Institute for Ethics in AI, Oxford University (Online & In-Person)

Link: https://philevents.org/event/show/132182?ref=email

Deadline: March 27, 2025

The Artificial Intelligence and Collective Agency workshop explores philosophical and interdisciplinary perspectives on AI and group agency. Topics include analogies between AI and corporate or state entities, responsibility gaps, and the role of AI in collective decision-making. The workshop is open to researchers in philosophy, business ethics, law, and computer science, as well as policy and industry professionals. Preference is given to early-career scholars.

CFP: 2nd Dortmund Conference on Philosophy and Society 2025

Location: Dortmund, Germany

Deadline: May 31, 2025

The Department of Philosophy and Political Science at TU Dortmund University, in partnership with the Lamarr Institute for Machine Learning and Artificial Intelligence, invites paper submissions for the 2nd Dortmund Conference on Philosophy and Society. Scheduled for October 1–2, 2025, the conference will feature keynote speaker Kate Vredenburgh (LSE). The first day will host up to six presentations with interactive discussions and a public evening lecture by Vredenburgh, while the second day offers a student workshop exploring her research. Contributions addressing topics such as explainable AI, algorithmic bias, the right to explanation, and the impact of AI on work and institutions are especially welcome.

Grant: AISI Challenge Fund 2025

Link: https://www.aisi.gov.uk/grants#challenge-fund

Deadline: Rolling from March 5, 2025

The AI Security Institute (AISI) is offering grant funding of up to £200,000 per project for cutting-edge research in AI safety and security. The Challenge Fund supports research addressing AI risks, including cyber-attacks, AI misuse, and systemic vulnerabilities. Eligible researchers from UK and international academic institutions and non-profits are encouraged to apply. Applications open on March 5, 2025, and are reviewed on a rolling basis.

Training: Diverse Intelligences Summer Institute 2025

Location: St Andrews, Scotland

Link: https://disi.org/apply/

Deadline: Rolling from March 1, 2025

The Diverse Intelligences Summer Institute (DISI) invites applications for its summer 2025 program, running July 6-27. The Fellows Program seeks scholars from fields including biology, anthropology, AI, cognitive science, computer science, and philosophy for interdisciplinary research. Applications are reviewed on a rolling basis starting March 1.

Training: Cooperative AI Summer School 2025

Date: July 9–13, 2025

Location: Marlow, near London

Link: https://www.cooperativeai.com/summer-school/summer-school-2025

Deadline: March 7, 2025

Applications are now open for the Cooperative AI Summer School, designed for students and early-career professionals in AI, computer science, social sciences, and related fields. This program offers a unique opportunity to engage with leading researchers and peers on topics at the intersection of AI and cooperation.

Training: Intro to Transformative AI 5-Day Course

Location: Remote

Link: https://bluedot.org/intro-to-tai

Deadline: Rolling (next cohorts: March 17-21 and March 24-28)

BlueDot Impact offers an intensive course on the fundamentals and implications of transformative AI. The program features expert-facilitated group discussions and curated materials over 5 days, requiring a total commitment of 15 hours. Participants join small discussion groups to explore AI safety concepts. No technical background is needed. The course is free, with optional donations, and includes a completion certificate.

Jobs

Ethics Institute 2025 Summer Research Internship

Location: Boston, MA (in-person for at least 50% of the internship)

Link: Full details and application

Deadline: March 31, 2025

Prof. Sina Fazelpour is inviting applications for 12-week Research Internship positions during Summer 2025 (June–August or July–September). Interns will collaborate on developing concepts, methodologies, or frameworks to enhance AI evaluation and governance. This position is open to current PhD students and recent PhD graduates with a demonstrated interest in AI ethics and governance. Applicants from diverse fields—including philosophy, cognitive science, computer science, statistics, human-computer interaction, network science, and science & technology studies—are encouraged to apply.

Post-doctoral Researcher Positions (3)

Location: New York University | New York, NY

Link: https://philjobs.org/job/show/28878

Deadline: March 30, 2025

NYU's Department of Philosophy and Center for Mind, Brain, and Consciousness is seeking to fill up to three postdoctoral or research scientist positions specializing in philosophy of AI and philosophy of mind, beginning September 2025. These research-focused roles (no teaching duties) will support Professor David Chalmers' projects on artificial consciousness and related topics. Postdoctoral positions require a PhD earned between September 2020 and August 2025, while Research Scientist positions are for those who earned their PhD between September 2015 and August 2020. Both positions offer a $62,500 annual base salary. Applications, including a CV, writing samples, a research statement, and references, must be submitted via Interfolio by March 30, 2025.

Sloan Foundation Metascience and AI Postdoctoral Fellowship

Location: Various eligible institutions (US/Canada preferred)

Link: https://sloan.org/programs/digital-technology/aipostdoc-rfp

Deadline: April 10, 2025, 5:00pm ET

Two-year postdoctoral fellowship ($250,000 total) for social sciences and humanities researchers studying AI's implications for science and research. Fellows must have completed their PhD by the start date and must not hold a permanent or tenure-track position. Research focuses on how AI is changing research practices, its epistemic and ethical implications, and policy responses. Key areas include AI's impact on scientific methods, research pace, explainability, and human-AI collaboration in science. Includes a fully funded 2026 summer school. Applications require a research vision statement, an approach description, a career development plan, a CV, a mentor support letter, and a budget. UK-based applicants should apply through the parallel UKRI program.

Post-doctoral Researcher Positions (2)

Location: Trinity College Dublin, Ireland

Link: https://aial.ie/pages/hiring/post-doc-researcher/

Deadline: Rolling basis

The AI Accountability Lab (AIAL) is seeking two full-time post-doctoral fellows for a 2-year term to work with Dr. Abeba Birhane on policy translation and AI evaluation. The policy translation role focuses on investigating regulatory loopholes and producing policy insights, while the AI evaluation position involves designing and executing audits of AI systems for bias and harm. Candidates should submit a letter of motivation, CV, and representative work.

Papers

No Right to an Explanation: Why Algorithmic Decision-Making Doesn't Require Explanations

Authors: Brett Karlan and Henrik Kugelberg | Philosophy and Phenomenological Research (forthcoming)

Karlan and Kugelberg argue against a "right to an explanation" for algorithmic decisions. They examine several justifications for such a right—from informed self-advocacy to democratic legitimacy—and conclude that in most cases, explanations are either impossible to provide, unnecessarily costly, or superfluous to securing the relevant normative goods. The authors contend that contestability of decisions, not their explainability, should be our primary concern. They further argue that singling out algorithmic decision-making as requiring special explanatory standards creates an unmotivated double standard compared to other areas of science policy where opacity is accepted. Their analysis suggests that while explanations may sometimes be valuable, embedding this as a right leads to suboptimal policy outcomes.

The Epistemic Cost of Opacity: How the Use of Artificial Intelligence Undermines the Knowledge of Medical Doctors in High-Stakes Contexts

Authors: Eva Schmidt, Paul Martin Putora, & Rianne Fijten | Philosophy and Technology

Schmidt, Putora, and Fijten contend that in high-stakes medical contexts, the inherent opacity of AI systems can undermine doctors’ knowledge—even when the systems are statistically reliable. By examining a case of cancer risk prediction, they show that doctors may arrive at true beliefs merely by luck, failing a crucial safety condition for genuine knowledge. Their analysis highlights epistemic risks with implications for clinical decision-making, moral responsibility, and the integrity of informed consent.

Keep the Future Human: Why and How We Should Close the Gates to AGI and Superintelligence, and What We Should Build Instead

Author: Anthony Aguirre | Preprint

This preprint contends that in an era of rapidly advancing AI, it is crucial to restrict the development of autonomous, superhuman systems. Aguirre argues that rather than pursuing ever-more powerful AGI, research should prioritize building trustworthy AI tools that empower individuals and strengthen human capacities for a more beneficial societal transformation.

Are Biological Systems More Intelligent Than Artificial Intelligence?

Author: Michael Timothy Bennett | Preprint

Bennett develops a mathematical framework for causal learning to show that the dynamic, decentralized control inherent in biology enables more efficient adaptation than the rigid, top-down structures typical of artificial systems. The work provides insights into designing more robust and adaptive technologies.

AGI, Governments, and Free Societies

Authors: Justin B. Bullock, Samuel Hammond, Séb Krier | Preprint

Drawing on the ‘narrow corridor’ framework, the authors explore how Artificial General Intelligence (AGI) might drive societies toward authoritarian surveillance or, alternatively, erode state legitimacy. They advocate for a governance framework that incorporates technical safeguards, adaptive regulation, and hybrid institutional designs to ensure that technological progress supports rather than undermines democratic freedoms.

Authorship and ChatGPT: a Conservative View

Authors: René van Woudenberg, Chris Ranalli, Daniel Bracker | Philosophy and Technology

The authors argue that despite its human-like text generation, ChatGPT lacks the intentionality, responsibility, and mental states required for true authorship. By contrasting liberal, conservative, and moderate views on AI agency, the paper ultimately defends a conservative stance that preserves a clear distinction between human creativity and machine output.

Towards a Theory of AI Personhood

Author: Francis Rhys Ward | Preprint

Ward outlines necessary conditions for AI personhood, focusing on agency, theory of mind, and self-awareness, then reviews evidence from contemporary machine learning research to assess whether current AI models meet these conditions, finding the results inconclusive. The paper raises significant ethical questions regarding the treatment and control of AI, suggesting that ascribing personhood to machines could challenge existing frameworks of regulation and alignment.

Better Feeds: Algorithms That Put People First

Authors: Alex Moehring, Alissa Cooper, Arvind Narayanan, Aviv Ovadya, Elissa Redmiles, Jeff Allen, Jonathan Stray, Julia Kamin, Leif Sigerson, Luke Thorburn, Matt Motyl, Motahhare Eslami, Nadine Farid Johnson, Nathaniel Lubin, Ravi Iyer, Zander Arnao | Knight-Georgetown Institute

This report offers an in-depth analysis of modern recommender systems, critiquing their focus on short-term engagement metrics like clicks and likes. Developed by the KGI Expert Working Group, it provides comprehensive policy guidance aimed at redesigning these algorithms to prioritize long-term user value and richer interactions. The report outlines actionable solutions for policymakers and product designers to shift toward systems that enhance overall user well-being.

Preparing for the Intelligence Explosion

Authors: Fin Moorhouse and Will MacAskill | Preprint

A guide to AGI preparedness that considers the grand challenges posed by an intelligence explosion, which of those challenges can be deferred until later, and what action can be taken now.

Links

OpenAI sets out its thinking on safety and alignment, discusses a much more expensive tier for next-generation agents, and submits a policy proposal to the US government that links fair use directly to national security. In other financial news, Scale AI wins a major DOD contract, and Anthropic raised $3.5 billion at a $61.5 billion post-money valuation.

In model news, Google releases Gemma 3 and an API feature for Gemini that lets you pass YouTube videos directly into context. Cohere’s new model, Command A, emerges as a compute-efficient, open-weights competitor to GPT-4o and DeepSeek-V3, and Epoch AI reports on falling inference prices for premium LLMs. Baidu releases two new models as the Chinese government tightens its grip on DeepSeek. In hardware news, Figure introduces BotQ, a factory to build 12,000 humanoid robots per year (for starters). In general, humanoid robotics is advancing by leaps and bounds.

Need a fairly technical rundown of recent work on reasoning models? Sebastian Raschka’s The State of Reasoning Models is a good place to get your bearings. After that, take a look at Jan Kulveit’s day-after-Christmas post on LLM Psychology for character-trained models for a little refresher.

Intro, Highlights, and Links by Seth Lazar, with editorial support from Cameron Pattison; Events, Opportunities, and Paper Summaries by Cameron Pattison, with curation by Seth; additional link-hunting support from the MINT Lab team.